vSphere 6 Lab Upgrade – Upgrading ESXi 5.5

I tested vSphere 6 quite intensively when it was in beta, but I never upgraded my lab, mainly because I need a stable environment to work in and wasn’t sure I could maintain that on the beta.

Checking for driver compatibility

In vSphere 5.5, VMware dropped the drivers for quite a few consumer-grade NICs; in 6 they’ve gone a step further and actively blocked many of these using a VIB package. For more information, see this excellent article by Andreas Peetz.

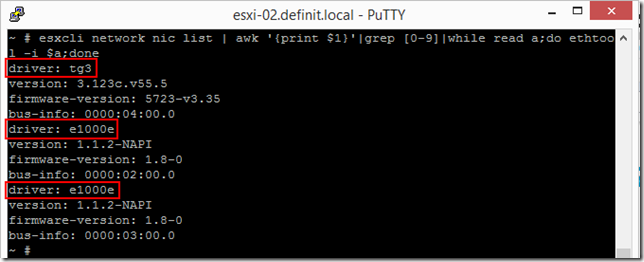

To list the NIC drivers you’re using on your ESXi hosts, use the following command:

```shell
esxcli network nic list | awk '{print $1}' | grep '[0-9]' | while read -r a; do ethtool -i "$a"; done
```

As you can see from the results, my HP N54Ls are running three NICs: an onboard Broadcom and two Intel PCI NICs. Fortunately, the Broadcom chip is supported, and the e1000e driver I’m using for the Intel NICs is compatible with vSphere 6 (in fact, it is superseded by a native driver package).
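`esxcli network nic list` also prints a Driver column, so the same check can be sketched without `ethtool`. This sketch assumes the ESXi 5.5 table layout (a header line, a dashed separator, then one row per NIC with the driver in the third column); `nic_drivers` is a hypothetical helper name, not something that ships with ESXi.

```shell
#!/bin/sh
# Hypothetical helper: print "<vmnic> <driver>" for each NIC.
# Assumes the esxcli table layout: header line, dashed separator,
# then one row per NIC with the driver in the third column.
nic_drivers() {
  awk 'NR > 2 { print $1, $3 }'
}

# Only query esxcli when running on an ESXi host
if command -v esxcli >/dev/null 2>&1; then
  esxcli network nic list | nic_drivers
fi
```

Each driver name can then be checked against the vSphere 6 compatibility information before upgrading.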

Upgrade to ESXi 6 using vSphere Update Manager (VUM)

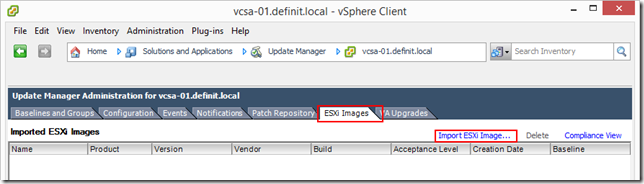

Open the VUM administration console in the vSphere Client (you can’t do this in the Web Client yet). If you’re in Compliance view, use the link in the top right-hand corner to get to the Admin view.

Select the ESXi Images tab, and then click the Import ESXi Image… link.

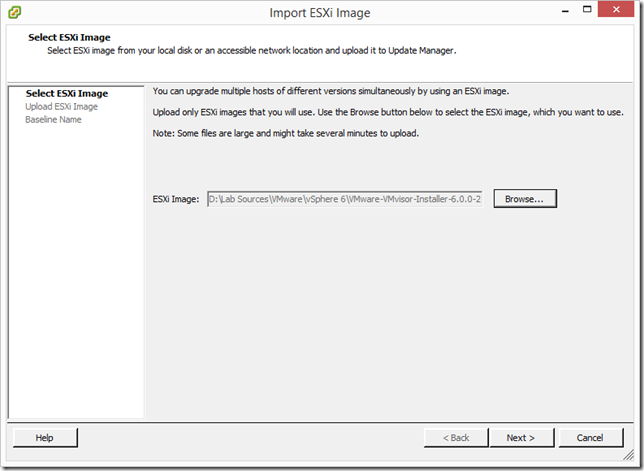

Select the downloaded ESXi 6 ISO image (VMware-VMvisor-Installer-6.0.0-2494585.x86_64.iso) and click Next.

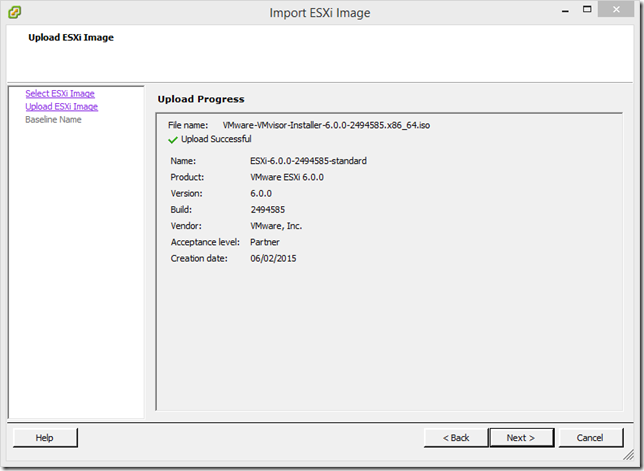

Once the ISO is uploaded, a summary is displayed.

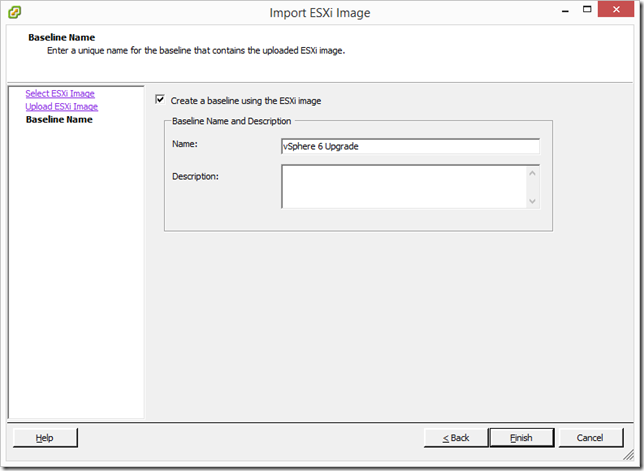

Next, create an upgrade baseline from the imported image.

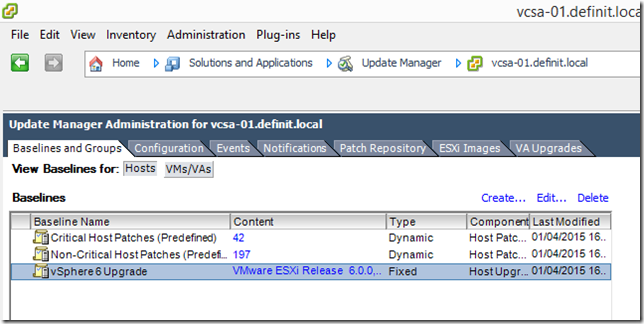

If you select the Baselines and Groups tab, you can see that the new baseline is available.

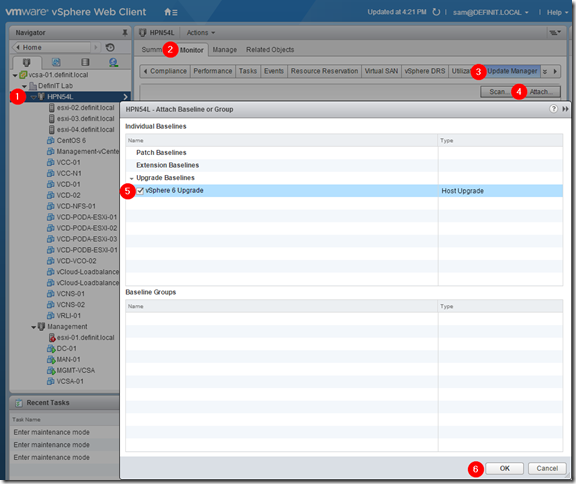

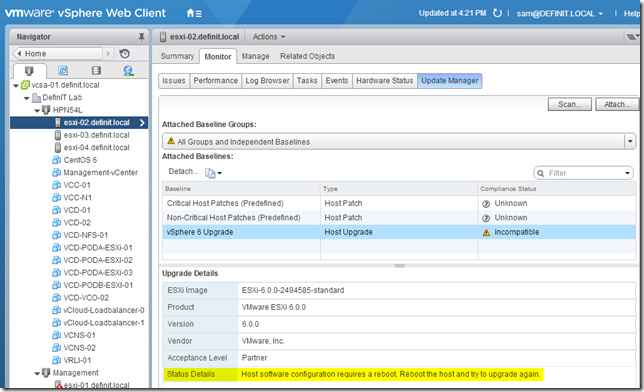

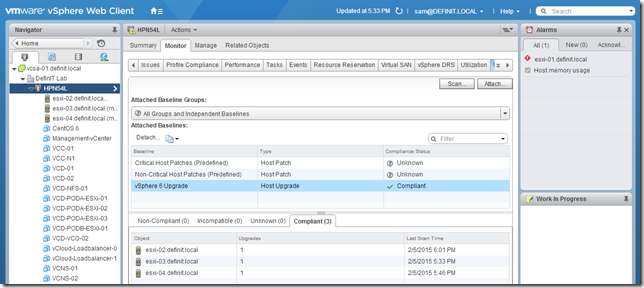

Switch back to the vSphere Web Client and select a cluster to upgrade (1) (I’ll do my HP N54L cluster), select the Monitor tab (2), then the Update Manager tab (3), select Attach (4), check the upgrade baseline (5) and then attach the baseline (6).

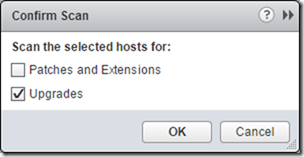

Click the Scan button, choose to scan for Upgrades, then click OK.

If you see “Incompatible”, select a host, then Monitor, then Update Manager, select the baseline and view the details. The most likely cause is that your hosts need a reboot.

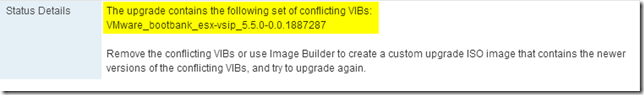

After the reboot I re-scanned the cluster only to see another incompatible message!

The upgrade contains the following set of conflicting vibs: vmware_bootbank_esx-vsip_5.5.0-0.0.1887287

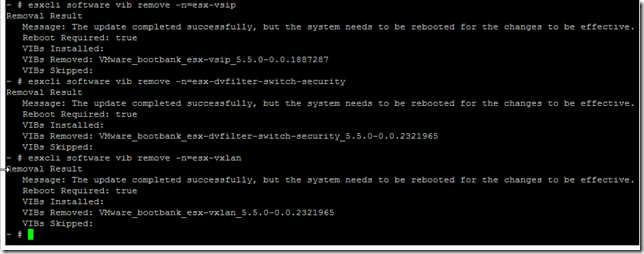

This time the culprits were the NSX host preparation VIBs. I’m not currently using NSX on this cluster, so I removed the VIBs and rebooted once again!
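The scan error reports a full VIB ID, but `esxcli software vib remove -n` expects the VIB name. A minimal sketch of the removal, assuming the usual `<vendor>_<bootbank>_<name>_<version>` ID layout; `vib_name_from_id` is a hypothetical helper, and only `esx-vsip` comes from the error above. NSX host preparation may have installed other VIBs as well (for example `esx-vxlan`), so the sketch lists them first.

```shell
#!/bin/sh
# Hypothetical helper: turn a VIB ID from the scan error into the VIB name
# that esxcli expects, assuming <vendor>_<bootbank>_<name>_<version>.
vib_name_from_id() {
  id=$1
  id=${id#*_}                # drop the vendor ("vmware")
  id=${id#*_}                # drop the bootbank field
  printf '%s\n' "${id%_*}"   # drop the trailing version
}

if command -v esxcli >/dev/null 2>&1; then
  # List installed VIBs whose names start with esx-v (esx-vsip, esx-vxlan, ...)
  esxcli software vib list | grep -i esx-v
  # Remove the conflicting VIB reported by the scan
  esxcli software vib remove -n "$(vib_name_from_id vmware_bootbank_esx-vsip_5.5.0-0.0.1887287)"
fi
```

Repeat the removal for any other NSX VIBs the list shows, then reboot the host before re-scanning.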

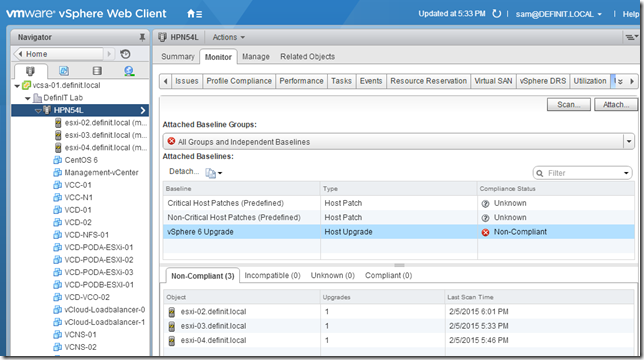

Once the reboot has completed, re-scan the cluster and you should have Non-Compliant status, rather than Incompatible.

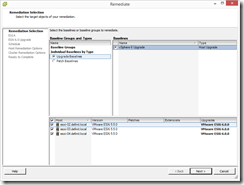

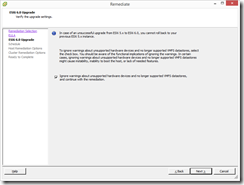

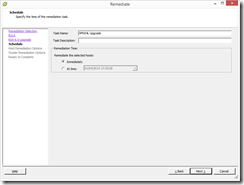

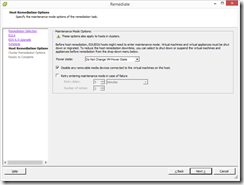

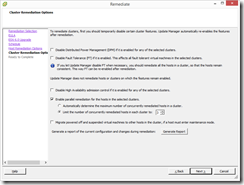

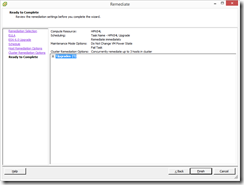

Now you can go ahead and remediate the cluster using the vSphere Client; the process is no different in 6.

It took a good hour or so, but my three hosts were updated successfully!

Update an ESXi host using the offline bundle

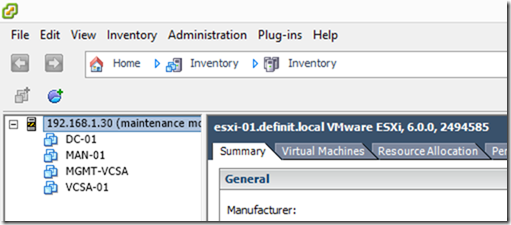

I only have one server in my Management “cluster” at the moment, so I need to upgrade it with my vSphere management components offline. To do this, I’ll use the ESXi Offline Bundle.

Download the offline bundle (VMware-ESXi-6.0.0-2494585-depot.zip) and upload it to a datastore that the host you’re upgrading can access.

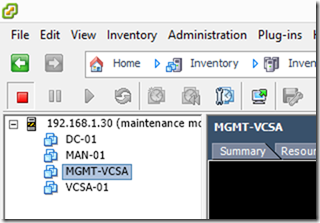

Power down any running VMs and put the host into maintenance mode.
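If vCenter runs on this host, the client won’t be much help once it has been shut down, so this step can also be done from the ESXi Shell. A rough sketch, assuming every VM has VMware Tools installed (guest shutdown depends on it); `vm_ids` is a hypothetical helper that reads `vim-cmd vmsvc/getallvms` output, whose first column is the VM ID.

```shell
#!/bin/sh
# Hypothetical helper: print the VM IDs from `vim-cmd vmsvc/getallvms`.
# Keeps only lines whose first field is numeric, which skips the header
# and any wrapped annotation text.
vm_ids() {
  awk '$1 ~ /^[0-9]+$/ { print $1 }'
}

if command -v vim-cmd >/dev/null 2>&1; then
  ids=$(vim-cmd vmsvc/getallvms | vm_ids)
  for id in $ids; do
    # Ask the guest OS to shut down (needs VMware Tools) unless already off
    if ! vim-cmd vmsvc/power.getstate "$id" | grep -q "Powered off"; then
      vim-cmd vmsvc/power.shutdown "$id"
    fi
  done
  for id in $ids; do
    # Wait for each VM to power off; loops forever if a guest ignores shutdown
    until vim-cmd vmsvc/power.getstate "$id" | grep -q "Powered off"; do
      sleep 10
    done
  done
  # All VMs are off, so the host can enter maintenance mode
  esxcli system maintenanceMode set --enable true
fi
```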

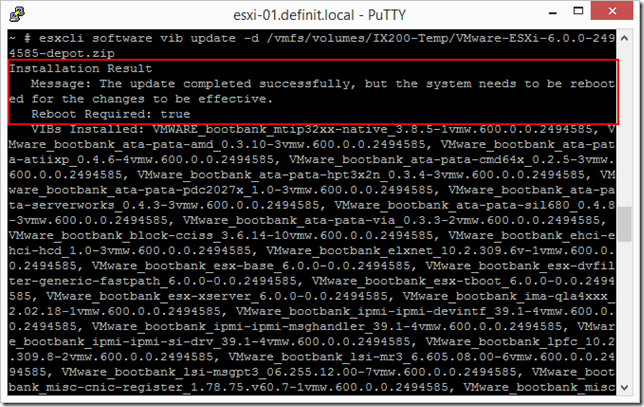

Log on to the host via SSH and update ESXi with esxcli, pointing it at the uploaded bundle (replace the path and file name with your own, of course).
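A minimal sketch of the update step, assuming the bundle sits on a datastore named `datastore1` (a hypothetical name) and contains an image profile called `ESXi-6.0.0-2494585-standard` (the usual naming pattern, but an assumption here). The sketch checks that the profile exists in the depot before applying it. It uses `profile update` rather than `profile install` because `update` upgrades in place without removing VIBs that aren’t part of the image profile.

```shell
#!/bin/sh
# Assumed location of the uploaded offline bundle (hypothetical datastore name)
DEPOT=/vmfs/volumes/datastore1/VMware-ESXi-6.0.0-2494585-depot.zip
# Assumed image profile name; the check below confirms it is in the depot
PROFILE=ESXi-6.0.0-2494585-standard

if command -v esxcli >/dev/null 2>&1; then
  # Only apply the profile if the depot actually lists it
  if esxcli software sources profile list -d "$DEPOT" | grep -q "^$PROFILE[[:space:]]"; then
    # Upgrade in place; VIBs not in the profile are kept, not removed
    esxcli software profile update -d "$DEPOT" -p "$PROFILE"
  else
    echo "Profile $PROFILE not found in $DEPOT" >&2
  fi
fi
```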

The output lists a lot of installed VIBs, but the first few lines tell you whether the update was successful and that you need to reboot.

Type “reboot” and press Enter to reboot the host, then wait for it to come up again.
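Once the host is back, its build number is a quick way to confirm that the upgrade took. A minimal sketch, assuming `esxcli system version get` prints a line of the form `Build: Releasebuild-<number>`; `build_number` is a hypothetical helper. The final command, which takes the host out of maintenance mode so VMs can be powered on again, is an assumed follow-up step.

```shell
#!/bin/sh
# Hypothetical helper: print the numeric build from `esxcli system version get`,
# assuming a "Build: Releasebuild-<number>" line in its output.
build_number() {
  awk -F': ' '/Build:/ { sub(/.*-/, "", $2); print $2 }'
}

if command -v esxcli >/dev/null 2>&1; then
  # 2494585 is the build of the ISO and offline bundle used in this post
  esxcli system version get | build_number
  # Assumed follow-up: leave maintenance mode so VMs can be started again
  esxcli system maintenanceMode set --enable false
fi
```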

That’s my lab hosts upgraded to ESXi 6. Stay tuned for the next step: upgrading VSAN 5.5 to 6.

Written by
