DataStore conflicts with an existing DataStore in the DataCenter – Manually disabling Storage I/O Control

I ran into this issue yesterday while reconnecting hosts to our vCenter Server following a complete reinstall. The reasons for the reinstall are a long story, but suffice to say that there were new certificates and the host passwords had been encrypted with the old ones.

The LUNs had been unpresented at the hardware level by the storage team, but had not been unmounted or removed from vCenter. This is not the way to remove storage - let me reiterate: remove storage properly. Unfortunately, in this case the storage was removed badly, which can lead to a condition called “All Paths Down” or APD. This is best explained by Cormac Hogan (@vmwarestorage) in the article Handling the All Paths Down (APD) condition.

When adding the hosts back into the cluster, I received the following message:

Reconnect host HostFQDN - Datastore ‘DatastoreName’ conflicts with an existing datastore in the datacenter that has the same URL (ds:///vmfs/volumes/4f00008-4200009c-0000-5000000ba397/), but is backed by different physical storage.

Looking at the datastore names, I realised that the three datastores causing this issue had been part of a Storage Cluster, so I attempted to remove the storage, but got this error:

Call “HostDatastoreSystem.RemoveDatastore” for object “datastoreSystem-119955” on vCenter Server “DefinIT-VC01” failed. Cannot remove datastore ‘DefinIT-006’ because storage I/O Control is either enabled or running in statistics collection mode on it. Correct the problem and retry the operation.

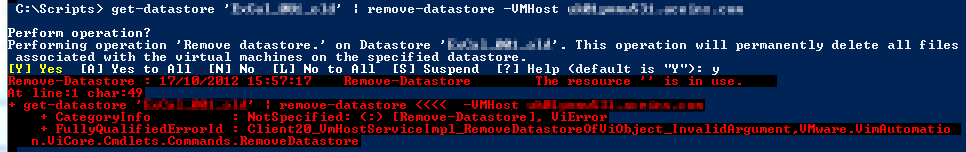

I also tried connecting directly to the host with the vSphere Client and removing the storage there; the same error occurred. Connecting to the host via SSH in Tech Support Mode, and removing with PowerCLI, both produced similar messages.

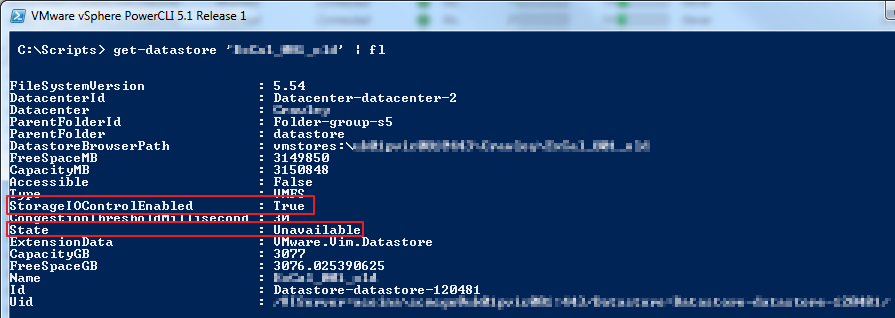

However, I already had the clue I needed to fix the problem: the error message states “storage I/O Control is either enabled or running in statistics collection mode”. SIOC was enabled on each LUN because it was part of a Storage Cluster that used Storage DRS with the I/O metric enabled. I verified this using PowerCLI: “Get-Datastore ‘DefinIT-006’ | fl” (fl is an alias for Format-List) shows that StorageIOControlEnabled is True and State is Unavailable.

The only way to take them out of this state would be to remove them from the Storage Cluster. That works if the hosts are connected to vCenter, but they were not, and I couldn’t get them into vCenter BECAUSE of this condition! Catch-22.

Googling “manually disable sioc esxi” led me to a virtuallyGhetto article about enabling SIOC without vCenter, which contained the solution!

Manually disable SIOC on a disconnected LUN and remove it from the host

I connected to a host via SSH and began…

First, I needed to find the Device Name of the missing storage using the following command:

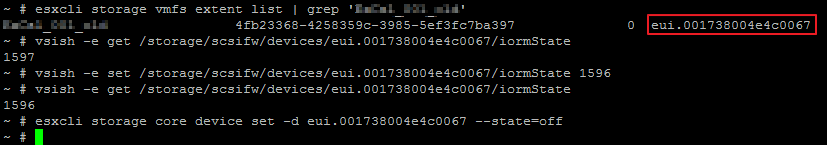

esxcli storage vmfs extent list | grep 'Definit-006'
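Because grep matches anywhere on the line and is case-sensitive, a slightly stricter lookup can help when several datastores have similar names. The sketch below is not part of the original procedure; it assumes the default column order of `esxcli storage vmfs extent list` (Volume Name, VMFS UUID, Extent Number, Device Name, Partition) and a volume name without spaces, and prints only the Device Name column for an exact, case-insensitive match. The function name `device_for_volume` is hypothetical.

```sh
#!/bin/sh
# Sketch: print the Device Name (naa./eui. ID) backing a VMFS volume.
# Assumes the default column order of `esxcli storage vmfs extent list`:
#   Volume Name | VMFS UUID | Extent Number | Device Name | Partition
# and that the volume name contains no spaces.
# Reads the esxcli output on stdin, skipping the header and dashed lines.
device_for_volume() {
    awk -v vol="$1" '
        NR > 2 && tolower($1) == tolower(vol) { print $4 }
    '
}

# Usage on the ESXi host:
#   esxcli storage vmfs extent list | device_for_volume DefinIT-006
```

A datastore with several extents prints one device per line, and each of those devices needs the steps that follow.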

Armed with the naa (or eui) name for the device, I could then get its iormState value:

vsish -e get /storage/scsifw/devices/eui.001738004e4c0067/iormState

This returned a value of 1597, meaning SIOC is enabled! According to the virtuallyGhetto article, a value of 1596 disables SIOC, so I used the set command to write that value:

vsish -e set /storage/scsifw/devices/eui.001738004e4c0067/iormState 1596
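Since 1596 and 1597 differ by a single digit and are easy to transpose, a tiny helper can translate the value read back with `vsish -e get`. This is a sketch that assumes the mapping given in the virtuallyGhetto article (1596 = SIOC disabled, 1597 = enabled) and that vsish prints the bare number. The function name `iorm_label` is hypothetical, and any other value is reported as unknown rather than guessed.

```sh
#!/bin/sh
# Sketch: translate an iormState value into an SIOC status.
# Assumed mapping (from the virtuallyGhetto article):
#   1596 = disabled, 1597 = enabled; anything else is "unknown".
iorm_label() {
    # Strip spaces, tabs and line endings in case the value is padded.
    v=$(printf '%s' "$1" | tr -d ' \t\r\n')
    case "$v" in
        1596) echo "disabled" ;;
        1597) echo "enabled" ;;
        *)    echo "unknown" ;;
    esac
}

# Usage on the ESXi host:
#   dev=eui.001738004e4c0067
#   iorm_label "$(vsish -e get /storage/scsifw/devices/$dev/iormState)"
```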

With that done, I could move on and attempt to remove the LUN (note that the setting takes a few seconds to update, so don’t try to remove the device too quickly!):

esxcli storage core device set -d eui.001738004e4c0067 --state=off

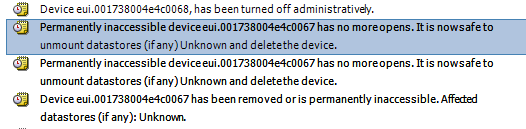
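Instead of guessing how long to wait before detaching, one option is to poll the value until it reads back as 1596 and only then run the detach. The sketch below assumes `vsish -e get` prints the bare number. The function name, the `VSISH`/`ESXCLI` override variables (which let the tools be replaced by stubs for a dry run) and the 30-second limit are all hypothetical choices, not part of the original procedure.

```sh
#!/bin/sh
# Sketch: disable SIOC on one device, wait for it to take effect, then detach.
# VSISH and ESXCLI default to the real tools but can be overridden
# (e.g. with stub functions) for testing. The 30-try limit is arbitrary.
VSISH=${VSISH:-vsish}
ESXCLI=${ESXCLI:-esxcli}

disable_sioc_and_detach() {
    dev=$1
    node=/storage/scsifw/devices/$dev/iormState
    "$VSISH" -e set "$node" 1596 || return 1

    # Poll roughly once a second until the value reads back as 1596.
    tries=0
    until [ "$("$VSISH" -e get "$node" | tr -d ' \t\r\n')" = "1596" ]; do
        tries=$((tries + 1))
        if [ "$tries" -ge 30 ]; then
            echo "iormState on $dev did not change; not detaching" >&2
            return 1
        fi
        sleep 1
    done

    # Only now detach the device.
    "$ESXCLI" storage core device set -d "$dev" --state=off
}
```

Call it once per device, e.g. `disable_sioc_and_detach eui.001738004e4c0067`. A non-zero return means a step failed; if the value never changed, the device is left attached.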

After this, you can either manually rescan all HBAs on the host or simply wait; I found that the devices disappeared from the host on their own after a few minutes. Reconnecting the host was a success! I could see the progress of my work in the vSphere Client.

This procedure was required for each affected LUN on each disconnected host; fortunately, only four hosts needed this work.
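For several LUNs on one host, the steps can be wrapped in a loop. This is a hypothetical sketch, not part of the original procedure. It takes the device IDs as arguments, uses a fixed (arbitrary) 10-second pause before each detach, and finishes with a single `esxcli storage core adapter rescan --all` rather than waiting for the devices to drop off. The `run` and `cleanup_devices` names and the `DRY_RUN` variable are illustrative; setting `DRY_RUN=1` prints the commands instead of running them.

```sh
#!/bin/sh
# Sketch: repeat the SIOC-disable and detach steps for every affected
# device on one host, then rescan all HBAs once at the end.
# DRY_RUN=1 prints each command instead of executing it.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

cleanup_devices() {
    for dev in "$@"; do
        run vsish -e set "/storage/scsifw/devices/$dev/iormState" 1596
        # Arbitrary pause so the setting can apply before detaching.
        run sleep 10
        run esxcli storage core device set -d "$dev" --state=off
    done
    run esxcli storage core adapter rescan --all
}

# Usage on each affected host (preview first, then run for real):
#   DRY_RUN=1 cleanup_devices eui.001738004e4c0067
#   cleanup_devices eui.001738004e4c0067
```

Run it once per affected host with that host's device IDs, as found with the extent list command above.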